Pundits frequently rail against the left-wing skew of the media. However, systematic evidence is hard to come by. Those quantitative studies that do exist are mainly of the US media. One famous study from 2005 later grew into a book by its first author, Tim Groseclose (an economist at George Mason University), titled Left Turn: How Liberal Media Bias Distorts the American Mind.

In the original study, Groseclose and Milyo looked at the number of times various thinktanks were mentioned by large US media outlets. The political positions of those thinktanks were then quantified by counting the number of times each one was mentioned by members of the US Congress. Each congressman (or woman) was assigned a political leaning based on his or her history of voting for or against specific bills. Thinktanks mentioned more often by left-leaning members were deemed to be more left-wing; those mentioned more often by right-leaning members were deemed to be more right-wing.

By combining the data on mentions of thinktanks by different media outlets with the data on mentions of thinktanks by different members of Congress, the authors were able to estimate the political positions of media outlets without any bias from subjective ratings.

However, most organisations that rate the political slant (left versus right) of different media outlets do rely on subjective ratings. For example, the website Media Bias/Fact Check provides ratings for large media outlets based on the judgements of a small team of editors. Other sites utilise surveys, that is, aggregated perceptions of bias.

Aggregated ratings are no doubt more accurate than a single editor’s opinions, but the statistical details are often left opaque. Who rated what? How many ratings were there? How were they aggregated? Unlike some sites, Ad Fontes Media has published a whitepaper describing their methodology. They have a pool of 33 in-house analysts who rate each media outlet in a balanced fashion (i.e., the same outlet is rated by a left-leaning member, a centrist member and a right-leaning member).

Still, all of these organisations deal with the narrow question of which way specific media outlets lean politically. It is well-known that some outlets are much more influential than others. When it comes to getting favorable media coverage, the New York Times counts far more than Fox News or Breitbart. So to estimate the political skew of the media as a whole, one might want to take a weighted average of media outlets’ political positions, with weights corresponding to their relative influence. Such a study could be done, but we are not aware of one.
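To make the idea concrete, here is a minimal sketch of an influence-weighted average. Everything in it is an assumption made for illustration: the outlet names, the slant scores (on a scale from -1 for left to +1 for right) and the influence weights are invented, and how influence should actually be measured is precisely the open question.

```python
# Hypothetical outlets: (name, slant from -1 = left to +1 = right, influence weight).
# All values are invented for illustration; none are real measurements.
outlets = [
    ("Outlet A", -0.4, 5.0),
    ("Outlet B", 0.6, 2.0),
    ("Outlet C", -0.1, 3.0),
]


def unweighted_slant(rows):
    """Simple mean of the slant scores, treating every outlet as equally important."""
    return sum(slant for _, slant, _ in rows) / len(rows)


def weighted_slant(rows):
    """Mean of the slant scores, weighted by each outlet's influence."""
    total_weight = sum(weight for _, _, weight in rows)
    if total_weight <= 0:
        raise ValueError("influence weights must sum to a positive number")
    return sum(slant * weight for _, slant, weight in rows) / total_weight


print(f"unweighted: {unweighted_slant(outlets):+.3f}")
print(f"weighted:   {weighted_slant(outlets):+.3f}")
```

In this toy example the simple mean comes out slightly right of zero while the weighted mean comes out left of zero, which shows why the choice of weights matters.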

An alternative way of analyzing media bias is to begin from the ground up, that is, with the journalists themselves. Social scientists have spent decades compiling evidence that political beliefs affect political behavior – a conclusion few people, in any case, would seriously dispute. This suggests that if journalists were found to lean strongly one way or the other, the finding would constitute circumstantial evidence of media bias.

So what do we know about the political leanings of journalists? Well, not that much, unfortunately. There is relatively little academic research on the topic, and most studies focus almost exclusively on the United States. The conservative thinktank Media Research Center has compiled extensive data on the voting patterns of American journalists. They note, “More than half of the journalists surveyed (52 percent) said they voted for Democrat John Kerry in the 2004 presidential election, while fewer than one-fifth (19 percent) said they voted for Republican George W. Bush. The public chose Bush, 51 to 48 percent.” But the US is just one country. Perhaps things are different elsewhere?

Our study began in 2017 when one of us discovered data on the voting patterns of Scandinavian journalists, and shared them on Twitter. Others soon replied with data for other European countries, and a study was set in motion. To obtain data for as many Western countries as possible, we turned to native speakers, and asked them to search newspapers and the grey literature (dissertations etc.) for survey data on journalists’ political views. By teaming up with many such individuals across the world, we were able to find relevant data for 17 Western countries, covering around 150 political parties.

To quantify the political skew of journalists with respect to each party, we matched the survey data with data from the closest national election in the relevant country. This allowed us to compute metrics of how much more or less journalists prefer a given party than the general population of voters. Our two main metrics were: first, the percentage point difference between journalists’ support for the party and the general public’s support for the party; and second, the ratio of support among journalists to support among the public. Each metric has its strengths and weaknesses, and we could not agree on the best metric. Hence we included both.
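The two metrics are simple to compute. The sketch below uses invented support figures for two hypothetical parties, and the function name `preference_metrics` is ours for illustration only.

```python
def preference_metrics(journalist_pct, public_pct):
    """Return (percentage point difference, ratio) for a single party.

    Both inputs are percentages of support, e.g. 30.0 for 30%.
    The ratio is undefined when the public share is zero, so NaN is returned.
    """
    difference = journalist_pct - public_pct
    ratio = journalist_pct / public_pct if public_pct > 0 else float("nan")
    return difference, ratio


# Hypothetical parties: (support among journalists in %, support among voters in %).
parties = {
    "Large Party": (30.0, 20.0),
    "Small Party": (3.0, 1.0),
}

for name, (journalists, public) in parties.items():
    diff, ratio = preference_metrics(journalists, public)
    print(f"{name}: {diff:+.1f} points, ratio {ratio:.2f}")
```

With these made-up numbers, the large party shows the bigger percentage point difference while the small party shows the bigger ratio. That is one way to see why the two metrics can rank parties differently.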

We also needed data on the political positions of the 150 or so political parties in our dataset. For this, we turned to the parties’ Wikipedia pages, most of which include a small box describing the political position of the party and its ideologies. By web-scraping the data from these boxes, we were able to assign a numerical left-right position to the vast majority of the parties. However, perhaps Wikipedia itself is biased? There is in fact research supporting this.

Wikipedia’s articles are written and edited by the volunteers who work there, many of whom may be left-leaning themselves. (We are not aware of any surveys, despite Wikipedia’s complaints about lack of diversity among their editors). Furthermore, the parties’ Wikipedia pages are based on published work about those parties, and most such work is done by journalists and academics – groups we already know or suspect to be left-leaning.

We therefore recruited 25 research assistants to rate each party’s position on a left-right scale. Each rater was told to consult any source they liked – including social media, news articles, party manifestos – other than Wikipedia (although we obviously could not prevent them from using the site). We thereby obtained a new measure of parties’ political positions, which we compared to Wikipedia’s. Encouragingly, the two were in very strong agreement, with a correlation of .86. The relationship is shown in the chart below:

After this, we were satisfied with the reliability of the political position data, at least regarding the relative positions of parties. We could not be sure their absolute positions had been measured correctly. (For example, although parties labelled “centre” tended to be in the middle of the party distribution, they might not be truly “centrist”).

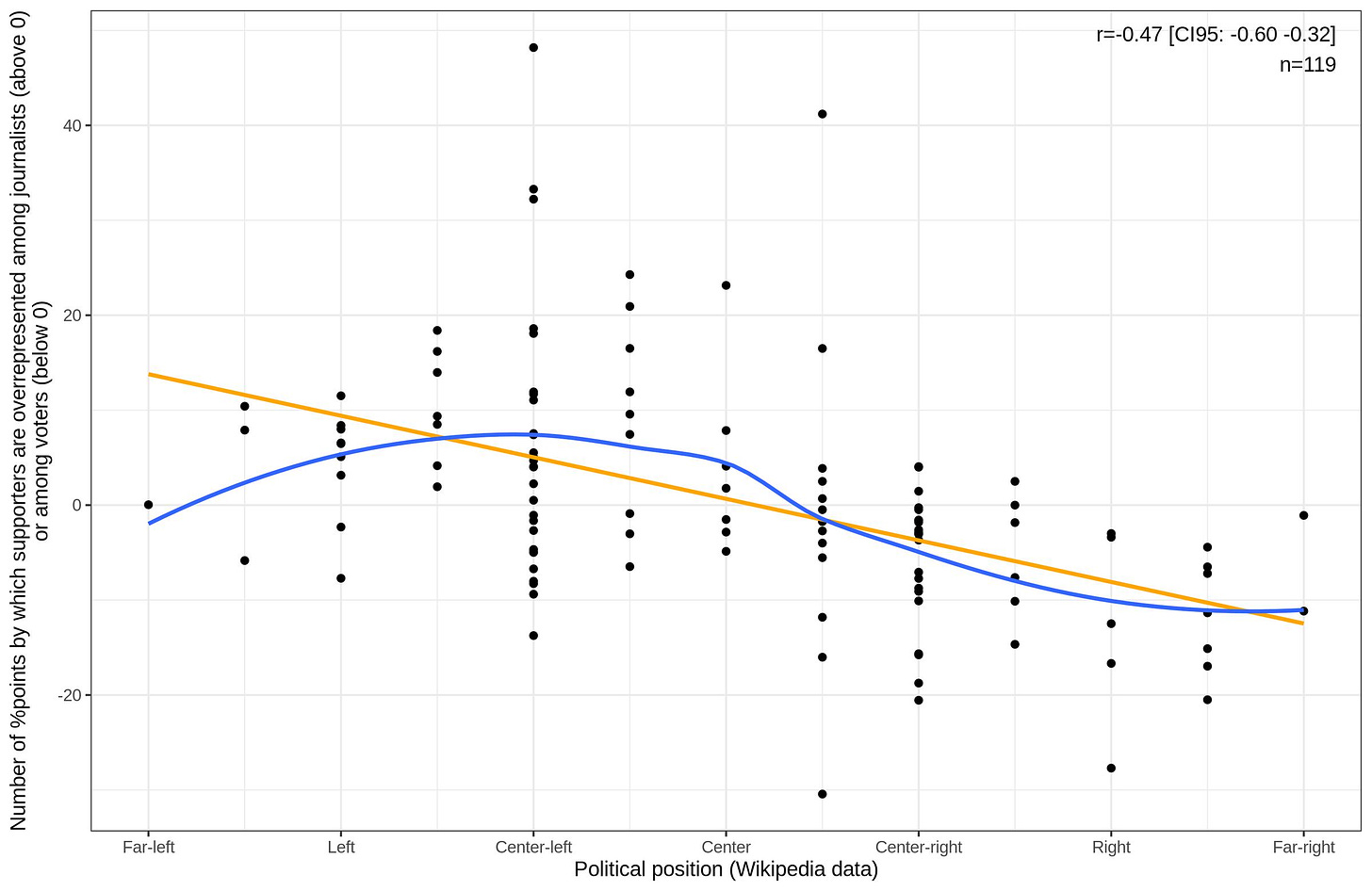
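For readers unfamiliar with how agreement between two sets of ratings is summarised, here is a minimal sketch of a Pearson correlation. We assume a plain Pearson coefficient for the purposes of illustration, and the two lists of party positions are invented, not taken from the study.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one sequence is constant")
    return covariance / math.sqrt(var_x * var_y)


# Hypothetical left-right positions for five parties (1 = far left, 5 = far right).
wikipedia_position = [1.0, 2.0, 3.0, 4.0, 5.0]
rater_position = [1.5, 1.8, 3.2, 3.9, 5.1]

print(f"r = {pearson_r(wikipedia_position, rater_position):.2f}")
```

A value close to 1 means the two sources order and space the parties in nearly the same way. It says nothing about whether both sources share the same absolute offset.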

Next, we looked at the relationships between the political positions of the parties, and our measures of journalists’ relative preferences for or against those parties. Since we had two measures of party positions, and two metrics of journalists’ relative preferences, there were four relationships to look at. However, these were all quite similar, so here we only show the relationship between the Wikipedia positions and the percentage point difference metric:

As you can see, there is a clear negative association: compared to the general public, journalists tend to prefer parties that are more left-wing overall. However, the pattern is somewhat nonlinear, such that journalists’ relative preference for far-left parties is weaker than their relative preference for center-left parties. Indeed, the average political position of journalists appears to be “left to center-left”.

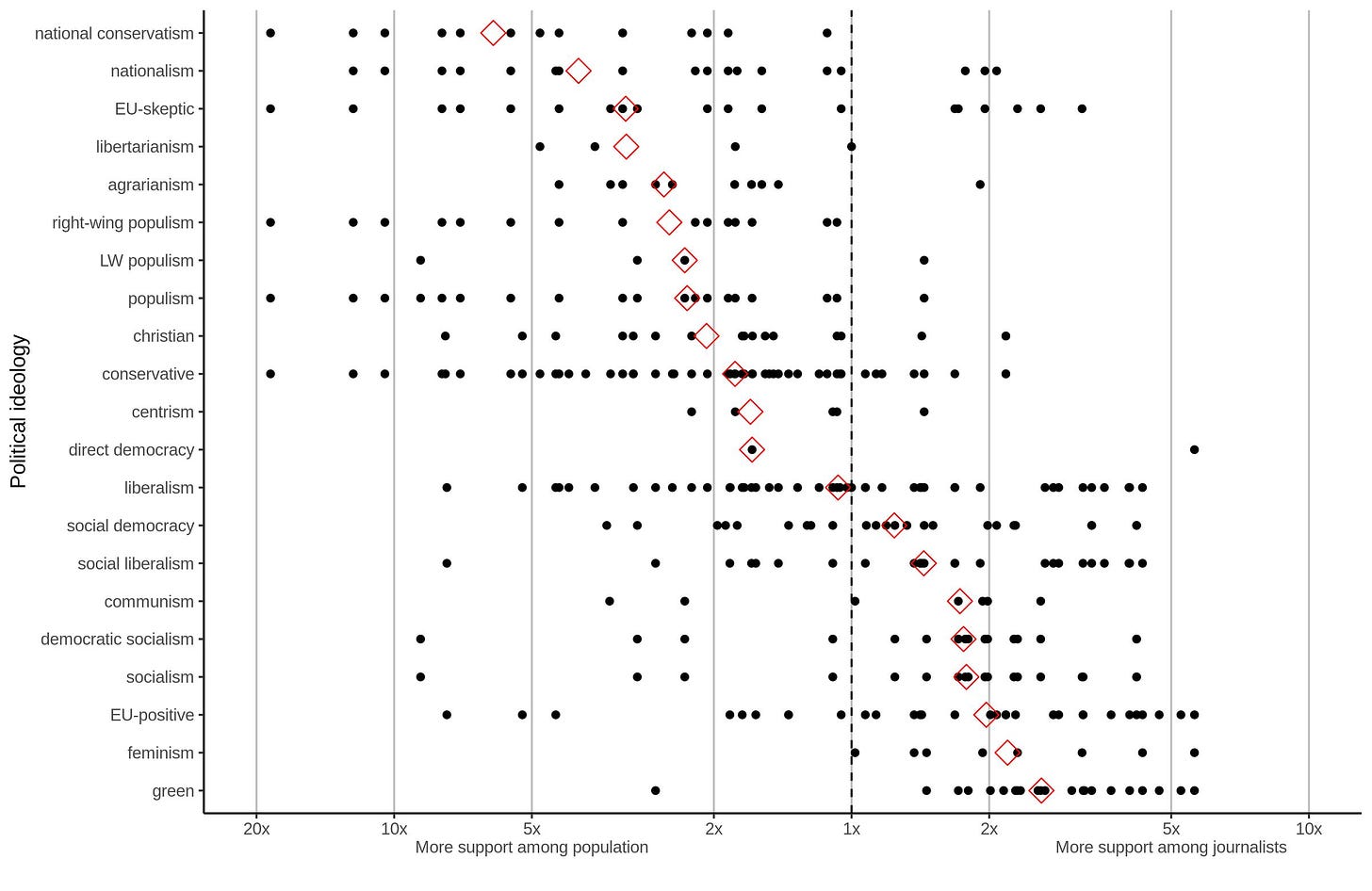

But what is it about “left to centre-left” parties that journalists find appealing? Using the information given on Wikipedia, we were able to assign ideologies to parties in a fairly consistent manner across countries. Some parties were tagged as EU-positive, others as anti-immigration; some as conservative, others as social democratic. This allowed us to compute journalists’ relative preference for each ideology, based on the positions of the parties. Results are shown in the chart below (red diamonds are the weighted medians):

Our findings are not particularly surprising. Compared to the general population, journalists prefer parties that are green, feminist and pro-EU, and they tend to dislike parties that are nationalist, national conservative and Eurosceptic. There is also a slightly weaker tendency for journalists to prefer traditionally left-wing parties, i.e., those that espouse socialism or social democracy.
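Since the summary statistic for each ideology is a weighted median, a short sketch of that calculation may help. The weighting scheme here is an assumption for illustration (for example, a party's share of the vote), and the parties and values are invented.

```python
def weighted_median(values, weights):
    """Lower weighted median: the smallest value at which the cumulative
    weight reaches at least half of the total weight."""
    if len(values) != len(weights) or not values:
        raise ValueError("values and weights must be non-empty and of equal length")
    if any(w < 0 for w in weights):
        raise ValueError("weights must be non-negative")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    cumulative = 0.0
    for value, weight in sorted(zip(values, weights)):
        cumulative += weight
        if cumulative >= total / 2:
            return value


# Hypothetical parties sharing one ideology tag:
# journalists' relative preference (percentage points) and an assumed weight.
relative_preference = [12.0, 3.0, 8.0]
party_weight = [1.0, 5.0, 2.0]

print(weighted_median(relative_preference, party_weight))
```

In this toy example the heavily weighted party pulls the weighted median well below the unweighted median of the same three values.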

We attempted to identify the strongest associations using a method called Bayesian Model Averaging, which is popular among economists. The details aren’t worth getting into, but it’s essentially a more advanced version of multiple regression that is useful in situations where there are few datapoints (about 120 parties, in our case) relative to the number of explanatory factors (about 20, in our case). Results showed that the best predictors of journalists’ relative support for a party were whether that party was green (positive), conservative (negative), or nationalist (negative).

Overall, we observed that Western journalists tend to have more left-liberal views than their fellow citizens. But how much does this realistically affect their work? We ask the reader to imagine a newsroom where only Trump supporters work. How would the personnel in this newsroom deal with, for example, questions about the events of January 6th, 2021 (variously characterised as a riot, a storming, or an insurrection at the US Capitol)?

No doubt they would be much less inclined to blame Trump, perhaps because a large number of them would believe that he actually won the presidential election. This scenario might seem far-fetched, and it is, because there are few if any such newsrooms. However, there are many newsrooms without a single Republican voter. Slate magazine (which leans left) carried out a survey of their employees, and found that 0% supported Trump in 2020. Does anyone really believe that such a newsroom would cover stories unfavourable to Biden – such as his son’s alleged corruption and drug abuse – in an unbiased way?

But we don’t have to rely on thought experiments. There's at least one published study (from 1996) in which journalists were asked to make hypothetical decisions in a newsroom to see whether their beliefs would have any influence. The authors sampled journalists from five countries (the United States, Great Britain, Germany, Italy, and Sweden). Those who took part were asked to decide things such as which headline to use for an article, and which picture to display.

The authors concluded, “In all five countries, there is a significant correlation between journalists’ personal beliefs and their news decisions. The relationship is strongest in news systems where partisanship is an acknowledged component of daily news coverage and is more pronounced among newspaper journalists than broadcast journalists, but partisanship has a modest impact on news decisions in all arenas of daily news, even those bound by law or tradition to a policy of political neutrality.”

We emailed the surviving author of the study, Thomas E. Patterson (professor of Government and the Press at Harvard Kennedy School), to ask whether there had been any replications of the study. He told us, “I don’t believe there’s been a replication.”

To be biased is to be human. And indeed, recent studies suggest that liberals and conservatives are about equally biased (although their biases tend to manifest in different domains). Polarisation in the US media has increased significantly over the last ten years, particularly since the start of the Great Awokening and the election of Donald Trump. As a result, norms of impartiality that once existed on both sides of the aisle have been eroded. Exactly how to deal with this situation going forward remains an open question.

Image: The New York Times Building in New York, 2007

This paper makes an effort to quantify journalists' political orientation: https://www.science.org/doi/10.1126/sciadv.aay9344